To researchers, machine learning is a relatively old technology. The last decade has seen considerable progress, both as a result of new techniques (backpropagation and deep learning, and the transformer architecture) and of massive investment of private-sector resources, especially computing power. The result has been the striking and widely publicised success of large language models.

But this rapid progress poses a paradox: for all the technical advances of the last decade, the impact on productivity growth has been undetectable. The productivity stagnation that has been such a feature of the last decade and a half continues, with all the harmful effects that produces in flat-lining living standards and challenging public finances. The situation is reminiscent of a 1987 remark by the economist Robert Solow: "You can see the computer age everywhere but in the productivity statistics."

There are two possible resolutions of this new Solow paradox, one optimistic, one pessimistic. The pessimist's view is that, in terms of innovation, the low-hanging fruit has already been picked. In this view, most famously stated by Robert Gordon, today's innovations are actually less economically significant than those of previous eras. Compared to electricity, Fordist manufacturing systems, mass personal mobility, antibiotics and telecoms, to give just a few examples, even artificial intelligence is only of second-order significance.

To add to the pessimism, there is a growing sense that the process of innovation itself is suffering from diminishing returns. In the words of a well-known recent paper: "Are ideas getting harder to find?"

The optimistic view, by contrast, is that the productivity gains will come, but they will take time. History tells us that economies need time to adapt to new general purpose technologies: infrastructures and business models need to be adapted, and the skills to use them need to spread through the working population. This was the experience with the introduction of electricity to industrial processes. Factories had been laid out around the need to transmit mechanical power from central steam engines through elaborate systems of belts and pulleys to the individual machines, so it took time to introduce systems where each machine had its own electric motor, and the period of adaptation might even involve a temporary reduction in productivity. Hence one might expect a new technology to follow a J-shaped curve.
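The J-curve idea can be made concrete with a toy model. The Python sketch below is an illustration only, not a model from the literature or from this talk: it assumes measured productivity equals its old baseline, minus an up-front adjustment cost that fades exponentially, plus a benefit that ramps up as adoption spreads. Every parameter name and value is hypothetical, chosen only to produce the characteristic dip and recovery.

```python
import math
from typing import Optional

# All parameter values are hypothetical, chosen only to show the shape of the curve.
BASELINE = 1.0          # productivity before the new technology arrives
ADJUSTMENT_COST = 0.1   # initial drag from reorganising plant, skills and business models
COST_DECAY = 0.3        # how quickly that drag fades (per year)
FULL_GAIN = 0.3         # eventual productivity gain once adoption is complete
ADOPTION_RATE = 0.1     # how quickly the gain is realised (per year)


def measured_productivity(year: float) -> float:
    """Toy J-curve: productivity `year` years after a technology is introduced."""
    if year < 0:
        return BASELINE  # before introduction, nothing changes
    drag = ADJUSTMENT_COST * math.exp(-COST_DECAY * year)
    gain = FULL_GAIN * (1.0 - math.exp(-ADOPTION_RATE * year))
    return BASELINE - drag + gain


def first_year_above_baseline(max_years: int = 100) -> Optional[int]:
    """First whole year in which productivity exceeds its pre-introduction level."""
    for year in range(max_years + 1):
        if measured_productivity(year) > BASELINE:
            return year
    return None


for year in range(-1, 6):
    print(year, round(measured_productivity(year), 3))
print("back above baseline in year", first_year_above_baseline())
```

With these assumed numbers, productivity drops to 0.9 on introduction and only regains its old level in year 3; different assumptions change the depth and length of the dip, but not its existence.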

Whether one is an optimist or a pessimist, the rise of artificial intelligence raises a number of common research questions:

- Are we measuring productivity correctly? How do we measure value in a world of fast-moving technologies?

- How do firms of different sizes adapt to new technologies like AI?

- How important, and how rate-limiting, is the development of new business models in reaping the benefits of AI?

- How do we drive productivity improvements in the public sector?

- What will be the role of AI in health and social care?

- How do national economies make system-wide transitions? When economies need to make simultaneous transitions, for example to net zero and to digitalisation, how do these interact?

- What institutions are needed to support the faster and wider diffusion of new technologies like AI, and the development of the skills needed to implement them?

- Given the UK's economic imbalances, how can regional innovation systems be developed to increase absorptive capacity for new technologies like AI?

A finer-grained analysis of the origins of our productivity slowdown actually deepens the new Solow paradox. It turns out that the productivity slowdown has been most marked in the most tech-intensive sectors. In the UK, too, the most careful decomposition finds that it is the sectors usually thought of as the most tech-intensive that have contributed to the slowdown: transport equipment (i.e., cars and aerospace), pharmaceuticals, computer software and telecoms.

It is worth looking in more detail at the case of pharmaceuticals to see how the promise of AI might play out. The decline in productivity of the pharmaceutical industry follows several decades in which, globally, the productivity of R&D, expressed as the number of new drugs brought to market per $billion of R&D spending, has been falling exponentially.
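An exponential decline is easiest to grasp through its halving time. The sketch below assumes R&D productivity (new drugs per $billion) falls by a constant fraction each year; the nine-year halving time is an illustrative assumption, the starting value is arbitrary, and the function names are hypothetical.

```python
HALVING_TIME_YEARS = 9.0  # assumed halving time, for illustration only


def drugs_per_billion(years_elapsed: float, start: float,
                      halving_time: float = HALVING_TIME_YEARS) -> float:
    """R&D productivity after `years_elapsed` years of constant exponential decline."""
    return start * 0.5 ** (years_elapsed / halving_time)


def annual_decline_rate(halving_time: float = HALVING_TIME_YEARS) -> float:
    """Constant fractional fall per year implied by a given halving time."""
    return 1.0 - 0.5 ** (1.0 / halving_time)


# Five halving times (45 years at the assumed rate) means a 32-fold fall.
print(drugs_per_billion(45, start=32.0))          # 1.0
print(f"{annual_decline_rate():.1%} per year")    # 7.4% per year
```

A fall of only around 7% a year looks modest, but compounded over decades it amounts to more than an order of magnitude.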

There's no clearer signal of the promise of AI in the life sciences than the effective solution of one of the most important fundamental problems in biology, the protein folding problem, by DeepMind's program AlphaFold. Many proteins fold into a unique three-dimensional structure whose precise details determine their function, for example in catalysing chemical reactions. This three-dimensional structure is determined by the (one-dimensional) sequence of different amino acids along the protein chain. Given the sequence, can one predict the structure? This problem had resisted theoretical solution for decades, but AlphaFold, using deep learning to establish the correlations between sequences and many experimentally determined structures, can now predict unknown structures from sequence data with great accuracy and reliability.

Given this success on an important problem in biology, it is natural to ask whether AI can be used to speed up the process of developing new drugs, and not surprising that this has prompted a rush of money from investors. One of the most high-profile start-ups in the UK pursuing this is BenevolentAI, floated on Euronext Amsterdam in 2021 at a €1.5 billion valuation.

Earlier this year, it was reported that BenevolentAI was laying off 180 staff after one of its drug candidates failed in phase 2 clinical trials. Its share price has plunged, and its market capitalisation now stands at €90 million. I have no reason to think that BenevolentAI is anything but a well-run company employing many excellent scientists, and I hope it recovers from these setbacks. But what lessons can be learnt from this disappointment? Given that AlphaFold was so successful, why has it been harder than expected to use AI to boost R&D productivity in the pharma industry?

Two factors made the success of AlphaFold possible. Firstly, the problem it was trying to solve was well defined: given a particular linear sequence of amino acids, what is the three-dimensional structure of the folded protein? Secondly, it had a large corpus of well-curated, public-domain data to work with, in the form of experimentally determined protein structures generated through decades of work in academia using x-ray diffraction and other techniques.

What has been the problem in pharma? AI has been valuable in generating new drug candidates, for example by identifying molecules that will fit into particular parts of a target protein molecule. But, according to pharma analyst Jack Scannell [1], it isn't identifying candidate molecules that is the rate-limiting step in drug development. Instead, the problem is the lack of screening techniques and disease models with good predictive power.

The lesson here, then, is that AI is very good at solving the problems it is well adapted for: well-posed problems, where there exist large, well-curated datasets that span the problem space. Its contribution to overall productivity growth, though, will depend on whether those AI-susceptible parts of the overall problem are in fact the rate-limiting steps.
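The rate-limiting argument has a familiar quantitative form in computing, Amdahl's law, borrowed here as an analogy rather than taken from Scannell: if only a fraction of a process is accelerated, the overall speed-up is capped by the part left untouched. The numbers in the usage lines are hypothetical and are not estimates for drug discovery.

```python
def overall_speedup(accelerated_fraction: float, stage_speedup: float) -> float:
    """Amdahl's law: whole-process speed-up when only one part gets faster.

    accelerated_fraction: share of total time spent in the step that AI speeds up.
    stage_speedup: factor by which that step becomes faster.
    """
    if not 0.0 <= accelerated_fraction <= 1.0:
        raise ValueError("accelerated_fraction must lie between 0 and 1")
    if stage_speedup <= 0:
        raise ValueError("stage_speedup must be positive")
    remaining_time = (1.0 - accelerated_fraction) + accelerated_fraction / stage_speedup
    return 1.0 / remaining_time


# Hypothetical: the AI-susceptible step is 10% of total time and becomes 10x faster.
print(round(overall_speedup(0.10, 10.0), 3))          # 1.099
# Even an infinitely fast step cannot beat 1 / (1 - 0.10).
print(round(overall_speedup(0.10, float("inf")), 3))  # 1.111
```

Expressing the bottleneck as a share of total time makes the point directly: however good the AI, the gain is bounded by how much of the process it actually touches.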

So how is the situation changed by the enormous impact of large language models? This new technology, "generative pre-trained transformers", consists of text prediction models based on establishing statistical relationships between the words found in a very large corpus of text, through what amounts to a massively multi-parameter regression [3]. This has, in effect, automated the production of plausible, though derivative and not wholly reliable, prose.
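Text prediction from word statistics can be illustrated at a vastly smaller scale. The sketch below is a bigram model, a deliberately crude stand-in: it counts which word follows which in a corpus and predicts the most frequent successor. Real GPT models use transformer networks over sub-word tokens with billions of parameters, so this shows only the idea of next-word prediction, not how those models work internally; the function names and corpus are hypothetical.

```python
from collections import Counter, defaultdict
from typing import Optional


def train_bigrams(text: str):
    """Count, for each word, how often each other word immediately follows it."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts


def predict_next(counts, word: str) -> Optional[str]:
    """Most frequent successor of `word`; ties go to the successor seen first."""
    followers = counts.get(word.lower())  # .get avoids creating empty entries
    if not followers:
        return None
    return followers.most_common(1)[0][0]


def generate(counts, start: str, length: int = 5) -> str:
    """Greedily extend `start` one predicted word at a time."""
    words = [start.lower()]
    for _ in range(length):
        nxt = predict_next(counts, words[-1])
        if nxt is None:
            break
        words.append(nxt)
    return " ".join(words)


corpus = "the cat sat on the mat the cat ate the fish"
model = train_bigrams(corpus)
print(predict_next(model, "the"))   # cat
print(generate(model, "the", 3))    # the cat sat on
```

Because it can only recombine word pairs it has already seen, even this toy shows in miniature why purely statistical text generation tends to be derivative.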

Naturally, sectors for which this is the stock-in-trade feel threatened by this development. What is absolutely clear is that this technology has essentially solved the problem of machine translation; it also raises some fascinating fundamental questions about the deep structure of language.

Which areas of economic life will be most affected by large language models? It is already clear that these tools can significantly speed up writing computer code. Any sector in which it is necessary to generate boilerplate prose, such as marketing, routine legal services and management consultancy, is likely to be affected. Similarly, the assimilation of large documents will be helped by the ability of LLMs to summarise complex texts.

What does the future hold? There is a very interesting discussion to be had, at the intersection of technology, biology and eschatology, about the prospects for "artificial general intelligence", but I'm not going to take that on here; I will focus on the near term.

We can expect further improvements in large language models. There will undoubtedly be gains in efficiency as techniques are refined and the fundamental understanding of how they work improves. We'll see more specialised training sets, which might improve the (currently rather shaky) reliability of the outputs.

There is one issue that might prove limiting. The rapid improvement we have seen in the performance of large language models has been driven by exponential increases in the amount of computing resource used to train them, with empirical scaling laws emerging that allow extrapolation. The cost of training these models is now measured in hundreds of millions of dollars, with the associated energy consumption starting to make a significant contribution to global carbon emissions. So it is important to understand the extent to which the cost of computing resources will limit the further development of this technology.
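Empirical scaling laws of this kind are typically reported as power laws, with a quantity such as model loss falling as a fixed power of training compute, which shows up as a straight line on log-log axes. The sketch below fits such a law by least squares in log space and uses it to extrapolate. The data are synthetic, generated from an assumed law with exponent 0.05; they are not measurements from any real model, and the function names are illustrative.

```python
import math


def fit_power_law(compute, loss):
    """Least-squares fit of loss = a * compute**(-alpha), done in log-log space."""
    xs = [math.log(c) for c in compute]
    ys = [math.log(v) for v in loss]
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return math.exp(intercept), -slope  # (a, alpha)


def predicted_loss(a, alpha, compute):
    """Extrapolate the fitted law to a new compute budget."""
    return a * compute ** (-alpha)


def compute_multiplier(loss_reduction_factor, alpha):
    """How many times more compute is needed to divide the loss by the given factor."""
    return loss_reduction_factor ** (1.0 / alpha)


# Synthetic data from an assumed law, loss = 50 * C**-0.05 (C in FLOPs, hypothetical).
budgets = [1e18, 1e19, 1e20, 1e21]
losses = [50 * c ** -0.05 for c in budgets]

a, alpha = fit_power_law(budgets, losses)
print(f"a = {a:.1f}, alpha = {alpha:.3f}")
print(f"extrapolated loss at 1e24 FLOPs: {predicted_loss(a, alpha, 1e24):.3f}")
print(f"compute multiplier to halve the loss: {compute_multiplier(2, alpha):.2e}")
```

The last line carries the economic point: under a power law, each fixed improvement needs a multiplicative increase in compute (with the assumed exponent, roughly a million-fold to halve the loss), which is why the cost of computing could become the binding constraint.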

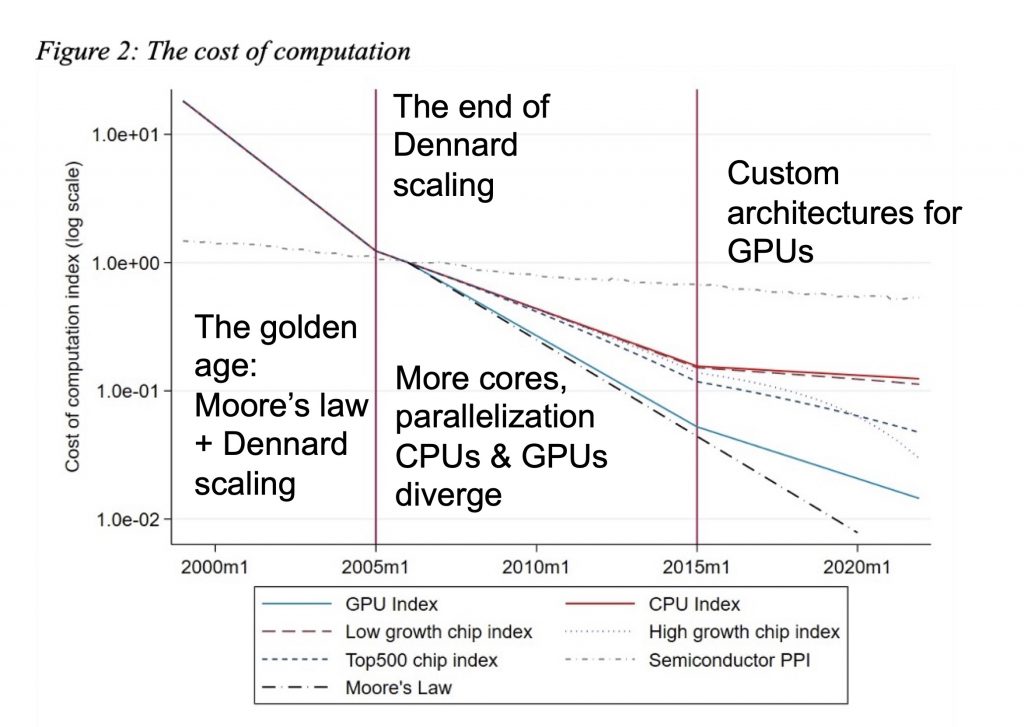

As I have actually gone over before, the rapid boosts in computer system power offered to us by Moore’s law, and the matching reductions in expense, started to slow in the mid-2000’s. A current detailed research study of the expense of computing by Diane Coyle and Lucy Hampton puts this in context[2] This is summed up in the figure listed below:

The expense of calculating with time. The strong lines represent finest fits to a really substantial information set gathered by Diane Coyle and Lucy Hampton; the figure is drawn from their paper [2]; the annotations are my own.
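Why a slowdown in the rate of improvement matters so much follows from compounding. The sketch below compares the cumulative gain in computing performance per dollar over a decade for two doubling times, and converts an annual growth rate into a doubling time; the doubling times are hypothetical round numbers, not values taken from Coyle and Hampton's paper.

```python
import math


def improvement_factor(years: float, doubling_time: float) -> float:
    """Cumulative gain after `years` if performance per dollar doubles every `doubling_time` years."""
    return 2.0 ** (years / doubling_time)


def doubling_time_from_annual_rate(annual_rate: float) -> float:
    """Doubling time in years implied by a constant annual growth rate (0.4 means 40%)."""
    return math.log(2.0) / math.log1p(annual_rate)


# Hypothetical doubling times, for illustration only.
for doubling in (2.0, 5.0):
    gain = improvement_factor(10, doubling)
    print(f"doubling every {doubling:g} years -> {gain:g}x per decade")

print(f"41% a year doubles in about {doubling_time_from_annual_rate(0.41):.1f} years")
```

With these assumed figures, stretching the doubling time from two years to five cuts the gain over a decade from 32-fold to 4-fold.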

The highly specialised integrated circuits used in huge numbers to train LLMs, such as the H100 graphics processing units designed by Nvidia and manufactured by TSMC that are the mainstay of the AI industry, are in a regime where performance improvements come less from the increasing transistor densities that gave us the golden age of Moore's law, and more from incremental improvements in task-specific architecture design, together with simply increasing the number of units.

For more than two centuries, human cultures in both east and west have used facility with language as a signal of wider abilities. So it's not surprising that large language models have seized the imagination. But it is important not to mistake the map for the territory.

Language and text are hugely important for how we organise and collaborate to achieve common goals, and for the way we preserve, transmit and build on the sum of human knowledge and culture. So we should not underestimate the power of tools that facilitate this. But equally, many of the constraints we face require direct engagement with the physical world, whether that is the need for a better understanding of biology that will allow us to develop new medicines more effectively, or the ability to generate abundant zero-carbon energy. This is where those other areas of machine learning, such as pattern recognition and finding relationships within large data sets, may make a bigger contribution.

Fluency with the written word is an important skill in itself, so the productivity improvements that come from large language models will arise where speed in generating and assimilating prose is the rate-limiting step in producing economic value. For machine learning and artificial intelligence more widely, the rate at which productivity growth is boosted will depend not just on developments in the technology itself, but on the rate at which other technologies and business processes are adapted to take advantage of AI.

I do not think we can expect large language models, or AI in general, to be a magic bullet that instantly cures our productivity malaise. It is a powerful new technology, but as with all new technologies, we have to find the places in our economic system where it can add the most value, and the system itself will take time to adapt to the possibilities the new technology offers.

These notes are based on an informal talk I gave on behalf of the Productivity Institute. It benefited a lot from discussions with Bart van Ark. The opinions, though, are entirely my own, and I wouldn't necessarily expect him to agree with me.

[1] J.W. Scannell, "Eroom's Law and the decline in the productivity of biopharmaceutical R&D", in *Artificial Intelligence in Science: Challenges, Opportunities and the Future of Research*.

[2] Diane Coyle and Lucy Hampton, "Twenty-first century progress in computing".

[3] For a semi-technical account of how large language models work, I found this piece by Stephen Wolfram very helpful: "What Is ChatGPT Doing … and Why Does It Work?"