Creating robots that exhibit robust and dynamic locomotion capabilities, similar to animals or humans, has been a long-standing goal in the robotics community. In addition to completing tasks quickly and efficiently, agility allows legged robots to move through complex environments that are otherwise difficult to traverse. Researchers at Google have been pursuing agility for many years and across various form factors. Yet, while researchers have enabled robots to hike or jump over some obstacles, there is still no generally accepted benchmark that comprehensively measures robot agility or mobility. In contrast, benchmarks are driving forces behind the development of machine learning, such as ImageNet for computer vision and OpenAI Gym for reinforcement learning (RL).

In “Barkour: Benchmarking Animal-level Agility with Quadruped Robots”, we introduce the Barkour agility benchmark for quadruped robots, along with a Transformer-based generalist locomotion policy. Inspired by dog agility competitions, the benchmark requires a legged robot to sequentially display a variety of skills, including moving in different directions, traversing uneven terrain, and jumping over obstacles, within a limited timeframe. By providing a diverse and challenging obstacle course, the Barkour benchmark encourages researchers to develop locomotion controllers that move fast in a controllable and versatile way. Furthermore, by tying the performance metric to real dog performance, we provide an intuitive metric for understanding the robot's performance with respect to its animal counterparts.

| We invited a handful of dooglers to try the obstacle course to ensure that our agility objectives were realistic and challenging. Small dogs complete the obstacle course in approximately 10 s, whereas our robot's typical performance hovers around 20 s. |

Barkour benchmark

The Barkour scoring system uses a per-obstacle target time and an overall course target time, both based on the target speed of small dogs in novice agility competitions (about 1.7 m/s). Barkour scores range from 0 to 1, where 1 corresponds to the robot successfully traversing all the obstacles along the course within the allotted time of approximately 10 seconds, the average time a similar-sized dog needs to traverse the course. The robot receives penalties for skipping or failing obstacles, or for moving too slowly.
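A penalty-based score of this kind can be sketched as follows. This is a minimal, hypothetical sketch, not the official Barkour formula: only the ~1.7 m/s reference speed, the 0-to-1 range, and the three penalty types (skipping, failing, moving too slowly) come from the text. The penalty weights, per-obstacle path lengths, and all names are illustrative assumptions.

```python
from dataclasses import dataclass

TARGET_SPEED_MPS = 1.7  # reference speed of small dogs in novice competitions (from the text)

# Hypothetical penalty weights, chosen only for illustration.
FAIL_PENALTY = 0.1          # per skipped or failed obstacle
SLOW_PENALTY_PER_S = 0.01   # per second spent beyond an obstacle's target time


@dataclass
class ObstacleResult:
    name: str
    path_length_m: float  # hypothetical length of the path through this obstacle
    time_s: float         # time the robot actually spent on it
    completed: bool       # False if the obstacle was skipped or failed


def target_time(path_length_m: float) -> float:
    """Per-obstacle target time implied by the reference speed."""
    return path_length_m / TARGET_SPEED_MPS


def course_target_time(path_lengths_m: list[float]) -> float:
    """Overall course target time: the sum of the per-obstacle targets."""
    return sum(target_time(length) for length in path_lengths_m)


def barkour_style_score(results: list[ObstacleResult]) -> float:
    """Start from a perfect score of 1, subtract penalties, and clamp to [0, 1]."""
    penalty = 0.0
    for r in results:
        if not r.completed:
            penalty += FAIL_PENALTY
        overtime = max(0.0, r.time_s - target_time(r.path_length_m))
        penalty += SLOW_PENALTY_PER_S * overtime
    return max(0.0, min(1.0, 1.0 - penalty))
```

With hypothetical path lengths of 5.1, 4.25, 3.4, and 4.25 m (17 m in total), `course_target_time` gives the roughly 10-second budget quoted above. Subtracting penalties from 1 and clamping the result keeps scores in the stated 0-to-1 range.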

Our standard course consists of four unique obstacles in a 5 m × 5 m area. This is a denser and smaller setup than a typical dog competition, which allows for easy deployment in a robotics lab. Beginning at the start table, the robot needs to weave through a set of poles, climb an A-frame, clear a 0.5 m broad jump, and then step onto the end table. We chose this subset of obstacles because they test a diverse set of skills while keeping the setup within a small footprint. As in real dog agility competitions, the Barkour benchmark can easily be adapted to a larger course area and may incorporate a variable number of obstacles and course configurations.
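One way to represent such a configurable course is as an ordered list of obstacles inside a bounded area. The sketch below is hypothetical: the coordinates, class names, and methods are assumptions for illustration, not the benchmark's actual specification. The straight-line length between obstacle centres is only a rough lower bound on the distance a robot actually covers.

```python
import math
from dataclasses import dataclass, field


@dataclass
class Obstacle:
    name: str
    x: float  # hypothetical centre position, metres
    y: float


@dataclass
class Course:
    width_m: float
    depth_m: float
    obstacles: list[Obstacle] = field(default_factory=list)

    def add(self, name: str, x: float, y: float) -> "Course":
        """Append the next obstacle in running order, rejecting ones outside the area."""
        if not (0.0 <= x <= self.width_m and 0.0 <= y <= self.depth_m):
            raise ValueError(f"{name} at ({x}, {y}) is outside the course area")
        self.obstacles.append(Obstacle(name, x, y))
        return self  # allow chaining

    def straight_line_length(self) -> float:
        """Sum of straight segments between consecutive obstacle centres."""
        pts = [(o.x, o.y) for o in self.obstacles]
        return sum(math.dist(a, b) for a, b in zip(pts, pts[1:]))


# Hypothetical layout of the standard 5 m x 5 m course.
standard = (
    Course(5.0, 5.0)
    .add("start table", 0.5, 0.5)
    .add("weave poles", 2.5, 0.5)
    .add("A-frame", 4.5, 2.5)
    .add("broad jump", 2.5, 4.5)
    .add("end table", 0.5, 4.5)
)
```

To adapt the course, construct a `Course` with a larger area and add more obstacles. The bounds check catches obstacles that would not fit in the available footprint.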

Learning agile locomotion skills

The Barkour benchmark features a diverse set of obstacles and a delayed reward system, which pose a significant challenge when training a single policy that can complete the entire obstacle course. To set a strong performance baseline and demonstrate the effectiveness of the benchmark for robotic agility research, we therefore adopt a student-teacher framework combined with a zero-shot sim-to-real approach. First, we train individual specialist locomotion skills (teachers) for different obstacles using on-policy RL methods. In particular, we leverage recent advances in large-scale parallel simulation to equip the robot with individual skills, including walking, slope climbing, and jumping policies.
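The teacher stage relies on on-policy RL run across many simulated environments at once. As a toy illustration of that idea, not the authors' training code or algorithm, the sketch below uses plain REINFORCE (swapped in for whatever on-policy method was actually used) on a one-step task with a hypothetical "ideal" action `target_gain * x`. All `num_envs` parallel copies are sampled with the current policy for every update, and each batch is discarded after a single gradient step, which is what makes the method on-policy.

```python
import random


def train_specialist(target_gain=2.0, num_envs=2048, iters=100,
                     lr=0.5, sigma=0.3, seed=0):
    """Toy on-policy policy gradient (REINFORCE) over a batch of parallel environments.

    Each environment is a one-step task: observe x ~ U(-1, 1), output an action a,
    receive reward -(a - target_gain * x)**2. The policy is Gaussian,
    a ~ N(theta * x, sigma**2), with a single learnable parameter theta.
    """
    rng = random.Random(seed)
    theta = 0.0
    for _ in range(iters):
        # Fresh on-policy batch: every parallel environment uses the current theta.
        xs = [rng.uniform(-1.0, 1.0) for _ in range(num_envs)]
        acts = [rng.gauss(theta * x, sigma) for x in xs]
        rewards = [-(a - target_gain * x) ** 2 for a, x in zip(acts, xs)]
        baseline = sum(rewards) / num_envs  # mean reward, used to reduce variance
        # d/dtheta of log N(a; theta*x, sigma^2) is (a - theta*x) * x / sigma^2
        grad = sum((r - baseline) * (a - theta * x) * x / sigma ** 2
                   for r, a, x in zip(rewards, acts, xs)) / num_envs
        theta += lr * grad  # gradient ascent on expected reward
    return theta
```

Calling `train_specialist()` drives `theta` close to 2.0. Larger batches (more parallel environments) lower the variance of each gradient estimate, which is the main benefit massively parallel simulation offers on-policy methods.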

Next, we train a single policy (the student) that performs all the skills and the transitions between them, using a student-teacher framework built on the specialist skills we previously trained. We use simulation rollouts to create datasets of state-action pairs for each of the specialist skills. This dataset is then distilled into a single Transformer-based generalist locomotion policy, which can handle various terrains and adjust the robot's gait based on the perceived environment and the robot's state.
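The distillation step can be sketched as supervised regression (behaviour cloning) on pooled state-action pairs. This is a minimal sketch under strong simplifying assumptions: the three teachers are hypothetical closed-form functions, the "rollouts" are sampled states, and a linear student trained by SGD stands in for the Transformer. Only the overall structure, collecting labelled pairs from each specialist and fitting one student to all of them, mirrors the text.

```python
import random

# Hypothetical specialist "teachers". A state is (commanded speed, slope, gap width)
# and each teacher returns a single scalar action, standing in for joint targets.
def walk_teacher(cmd, slope, gap):
    return 0.8 * cmd

def climb_teacher(cmd, slope, gap):
    return 0.8 * cmd + 1.5 * slope

def jump_teacher(cmd, slope, gap):
    return 0.8 * cmd + 2.0 * gap

TEACHERS = {"walk": walk_teacher, "climb": climb_teacher, "jump": jump_teacher}


def rollout_states(skill, n, rng):
    """Stand-in for simulation rollouts: states each specialist sees on its own terrain."""
    states = []
    for _ in range(n):
        cmd = rng.uniform(0.0, 1.7)
        slope = rng.uniform(0.2, 0.5) if skill == "climb" else 0.0
        gap = rng.uniform(0.3, 0.6) if skill == "jump" else 0.0
        states.append((cmd, slope, gap))
    return states


def build_dataset(n_per_skill=1000, seed=0):
    """Pool (state, action) pairs, each action labelled by the matching teacher."""
    rng = random.Random(seed)
    data = []
    for skill, teacher in TEACHERS.items():
        for state in rollout_states(skill, n_per_skill, rng):
            data.append((state, teacher(*state)))
    return data


def distill(data, epochs=50, lr=0.05, seed=0):
    """Behaviour cloning: fit one linear student to all pairs by SGD on squared error."""
    rng = random.Random(seed)
    data = list(data)
    w, b = [0.0, 0.0, 0.0], 0.0
    for _ in range(epochs):
        rng.shuffle(data)
        for state, target in data:
            err = sum(wi * si for wi, si in zip(w, state)) + b - target
            w = [wi - lr * err * si for wi, si in zip(w, state)]
            b -= lr * err
    return w, b


def student(params, cmd, slope, gap):
    """The single distilled policy, usable on every terrain type."""
    w, b = params
    return w[0] * cmd + w[1] * slope + w[2] * gap + b
```

After `params = distill(build_dataset())`, the one `student` reproduces each teacher's action on that teacher's terrain. A real student also has to learn the transitions between skills, which this toy does not model.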


During deployment, we pair the locomotion transformer policy, which is capable of performing multiple skills, with a navigation controller that provides velocity commands based on the robot's position. Our trained policy controls the robot using three inputs: the robot's surroundings, represented as an elevation map; the velocity commands; and on-board sensory information provided by the robot.

| Deployment pipeline for the locomotion transformer architecture. At deployment time, a high-level navigation controller guides the real robot through the obstacle course by sending commands to the locomotion transformer policy. |
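The deployment loop described above, in which a high-level controller turns the robot's position into velocity commands for the policy, might look roughly like the sketch below. It is a hypothetical sketch: the waypoint-following rule (proportional heading control, slowing down when facing away), the gains, the 0.2 m reach radius, and all names are assumptions. The text does not say how the navigation controller is implemented.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose2D:
    x: float
    y: float
    yaw: float  # heading, radians


class WaypointNavigator:
    """Hypothetical high-level controller: robot position in, velocity command out."""

    def __init__(self, waypoints, max_speed=1.7, k_yaw=2.0, reach_radius=0.2):
        self.waypoints = list(waypoints)
        self.index = 0                    # current target waypoint
        self.max_speed = max_speed        # m/s
        self.k_yaw = k_yaw                # proportional gain on heading error
        self.reach_radius = reach_radius  # m

    def done(self):
        return self.index >= len(self.waypoints)

    def command(self, pose):
        """Return (forward_speed, yaw_rate) toward the current waypoint."""
        # Advance past any waypoints the robot has already reached.
        while not self.done():
            wx, wy = self.waypoints[self.index]
            if math.hypot(wx - pose.x, wy - pose.y) > self.reach_radius:
                break
            self.index += 1
        if self.done():
            return 0.0, 0.0
        wx, wy = self.waypoints[self.index]
        err = math.atan2(wy - pose.y, wx - pose.x) - pose.yaw
        err = math.atan2(math.sin(err), math.cos(err))  # wrap to [-pi, pi]
        forward = self.max_speed * max(0.0, math.cos(err))  # slow down when facing away
        return forward, self.k_yaw * err


def policy_observation(elevation_map, command, proprioception):
    """Concatenate an already-flattened elevation map, the command, and on-board sensing."""
    return list(elevation_map) + list(command) + list(proprioception)
```

At every control step, the navigator's command is combined with the elevation map and on-board readings into one input vector for the locomotion policy. Keeping navigation outside the policy means the same policy can follow any waypoint list.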

Robustness and repeatability are difficult to achieve when we aim for peak performance and maximum speed. Sometimes the robot might fail while overcoming an obstacle in an agile way. To handle failures, we train a recovery policy that quickly gets the robot back on its feet, allowing it to continue the episode.

Evaluation

We evaluate the Transformer-based generalist locomotion policy on custom-built quadruped robots and show that optimizing for the proposed benchmark yields agile, robust, and versatile skills for our robot in the real world. We further analyze various design choices in our system and their impact on system performance.


| Model of the custom-built robots used for evaluation. |

We deploy both the specialist and generalist policies to hardware (zero-shot sim-to-real). The robot's target trajectory is provided by a set of waypoints along the various obstacles. For the specialist policies, we use a hand-tuned policy switching mechanism that selects the most suitable specialist given the robot's position.

| Typical performance of our agile locomotion policies on the Barkour benchmark. Our custom-built quadruped robot robustly navigates the obstacles by leveraging various skills learned using RL in simulation. |
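A position-based switching mechanism for the specialist policies could be as simple as a lookup from course regions to skills. This is a hypothetical sketch: the text says only that the mechanism is hand-tuned and depends on the robot's position. The rectangular regions, their coordinates, the skill names, and the `walk` fallback are all assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Region:
    """Axis-aligned area of the course assigned to one specialist (hypothetical)."""
    x_min: float
    x_max: float
    y_min: float
    y_max: float
    skill: str

    def contains(self, x, y):
        return self.x_min <= x <= self.x_max and self.y_min <= y <= self.y_max


class PolicySwitcher:
    def __init__(self, regions, policies, default="walk"):
        self.regions = list(regions)  # checked in order; first match wins
        self.policies = policies      # skill name -> callable(observation) -> action
        self.default = default        # used on open ground between obstacles

    def select(self, x, y):
        for region in self.regions:
            if region.contains(x, y):
                return region.skill
        return self.default

    def act(self, x, y, observation):
        return self.policies[self.select(x, y)](observation)
```

A hard region lookup like this changes behavior abruptly at region boundaries. This is relevant to the comparison below, where the generalist policy exhibits smoother transitions between behaviors.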

We find that our policies can very often handle unexpected events or even hardware degradation, resulting in good average performance, but failures are still possible. As illustrated in the image below, when a failure occurs, our recovery policy quickly gets the robot back on its feet, allowing it to continue the episode. By combining the recovery policy with a simple walk-back-to-start policy, we can run repeated experiments with minimal human intervention to measure robustness.

| Qualitative example of robustness and recovery behaviors. The robot trips and rolls over after heading down the A-frame. This triggers the recovery policy, which enables the robot to get back up and continue the course. |

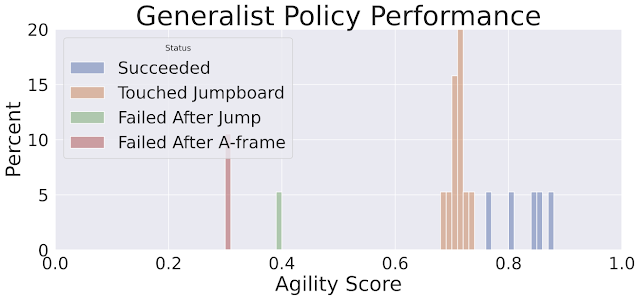
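The combination of a recovery policy and a walk-back-to-start policy can be organized as a small supervisory state machine. This sketch is hypothetical: the fall and upright tilt thresholds, the use of body roll and pitch to detect a fall, and all names are assumptions. The text does not describe how failures are detected or how the policies are sequenced.

```python
import math
from enum import Enum, auto


class Mode(Enum):
    RUN_COURSE = auto()
    RECOVER = auto()
    WALK_BACK = auto()


# Hypothetical thresholds on body roll/pitch. Using two values adds hysteresis,
# so the supervisor does not flip modes while the robot is halfway up.
FALL_TILT_RAD = math.radians(60)
UPRIGHT_TILT_RAD = math.radians(15)


def tilt(roll, pitch):
    return max(abs(roll), abs(pitch))


class ExperimentSupervisor:
    """Hypothetical top-level loop for repeated runs with minimal human intervention."""

    def __init__(self):
        self.mode = Mode.RUN_COURSE
        self.resume_mode = Mode.RUN_COURSE  # what to continue after recovering
        self.episodes_finished = 0
        self.recoveries = 0

    def step(self, roll, pitch, course_done, at_start):
        if self.mode is not Mode.RECOVER and tilt(roll, pitch) > FALL_TILT_RAD:
            self.resume_mode = self.mode
            self.mode = Mode.RECOVER
            self.recoveries += 1
        elif self.mode is Mode.RECOVER and tilt(roll, pitch) < UPRIGHT_TILT_RAD:
            self.mode = self.resume_mode  # back on its feet: continue the episode
        elif self.mode is Mode.RUN_COURSE and course_done:
            self.episodes_finished += 1
            self.mode = Mode.WALK_BACK    # hand over to a walk-back-to-start policy
        elif self.mode is Mode.WALK_BACK and at_start:
            self.mode = Mode.RUN_COURSE   # begin the next repetition
        return self.mode
```

The `episodes_finished` and `recoveries` counters are the kind of bookkeeping that large batches of unattended runs need when robustness is measured.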

We find that across a large number of evaluations, the single generalist locomotion transformer policy and the specialist policies with the policy switching mechanism achieve similar performance. The locomotion transformer policy has a slightly lower average Barkour score, but exhibits smoother transitions between behaviors and gaits.

| Measuring the robustness of the different policies across a large number of runs on the Barkour benchmark. |


| Histogram of the agility scores for the locomotion transformer policy. The highest scores, shown in blue (0.75–0.9), represent the runs where the robot successfully completes all obstacles. |

Conclusion

We believe that developing a benchmark for legged robotics is an important first step in quantifying progress toward animal-level agility. To establish a strong baseline, we investigated a zero-shot sim-to-real approach that takes advantage of large-scale parallel simulation and recent advances in training Transformer-based architectures. Our findings demonstrate that Barkour is a challenging benchmark that can be easily customized. They also show that our learning-based method for solving the benchmark provides a quadruped robot with a single low-level policy that can perform a variety of agile low-level skills.

Acknowledgements

The authors of this post are now part of Google DeepMind. We would like to thank our co-authors at Google DeepMind and our collaborators at Google Research: Wenhao Yu, J. Chase Kew, Tingnan Zhang, Daniel Freeman, Kuang-Hei Lee, Lisa Lee, Stefano Saliceti, Vincent Zhuang, Nathan Batchelor, Steven Bohez, Federico Casarini, Jose Enrique Chen, Omar Cortes, Erwin Coumans, Adil Dostmohamed, Gabriel Dulac-Arnold, Alejandro Escontrela, Erik Frey, Roland Hafner, Deepali Jain, Yuheng Kuang, Edward Lee, Linda Luu, Ofir Nachum, Ken Oslund, Jason Powell, Diego Reyes, Francesco Romano, Feresteh Sadeghi, Ron Sloat, Baruch Tabanpour, Daniel Zheng, Michael Neunert, Raia Hadsell, Nicolas Heess, Francesco Nori, Jeff Seto, Carolina Parada, Vikas Sindhwani, Vincent Vanhoucke, and Jie Tan. We would also like to thank Marissa Giustina, Ben Jyenis, Gus Kouretas, Nubby Lee, James Lubin, Sherry Moore, Thinh Nguyen, Krista Reymann, Satoshi Kataoka, Trish Blazina, and the members of the robotics team at Google DeepMind for their contributions to the project. Thanks to John Guilyard for creating the animations in this post.