Introduction

We continuously enhance the performance of Rockset and evaluate different hardware offerings to find the one with the best price-performance for streaming ingestion and low-latency queries.

As a result of ongoing performance enhancements, we released software that leverages 3rd Gen Intel® Xeon® Scalable processors, codenamed Ice Lake. With the move to new hardware, Rockset queries are now 84% faster than before on the Star Schema Benchmark (SSB), an industry-standard benchmark for query performance typical of data applications.

While software leveraging Intel Ice Lake contributed to faster performance on the SSB, there have been several other performance enhancements that benefit common query patterns in data applications:

- Materialized Common Table Expressions (CTEs): Rockset materializes CTEs to reduce overall query execution time.

- Stats-Based Predicate Pushdown: Rockset uses collection statistics to adapt its predicate pushdown strategy, resulting in up to 10x faster queries.

- Row-Store Cache: A Multiversion Concurrency Control (MVCC) cache was introduced for the row store to reduce the overhead of metadata operations, and thereby query latency, when the working set fits into memory.

In this blog, we will describe the SSB configuration, results and performance enhancements.

Configuration & Results

The SSB is a well-established benchmark based on TPC-H that captures common query patterns for data applications.

To understand the impact of Intel Ice Lake on real-time analytics workloads, we ran a before-and-after comparison using the SSB. For this benchmark, Rockset denormalized the data and scaled the dataset to 100 GB and 600M rows, a scale factor of 100. Rockset used its XLarge Virtual Instance (VI) with 32 vCPU and 256 GiB of memory.

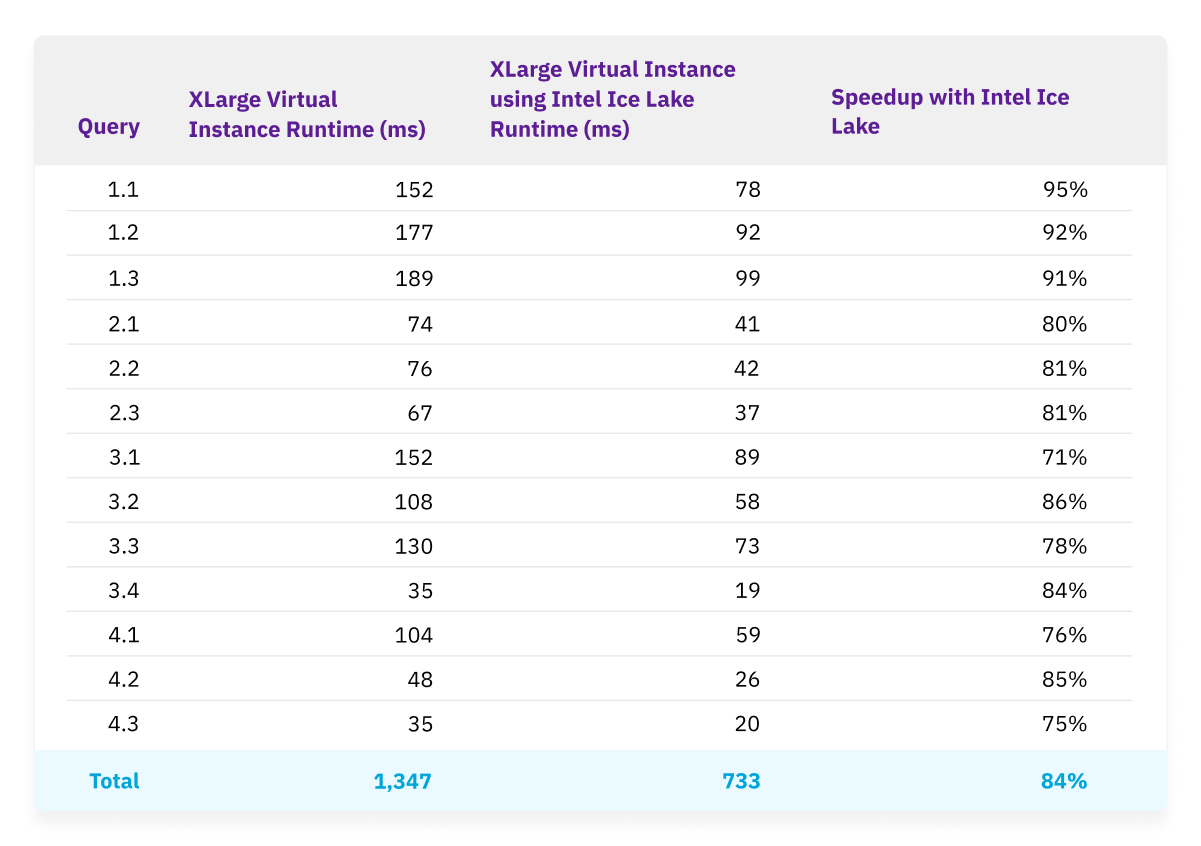

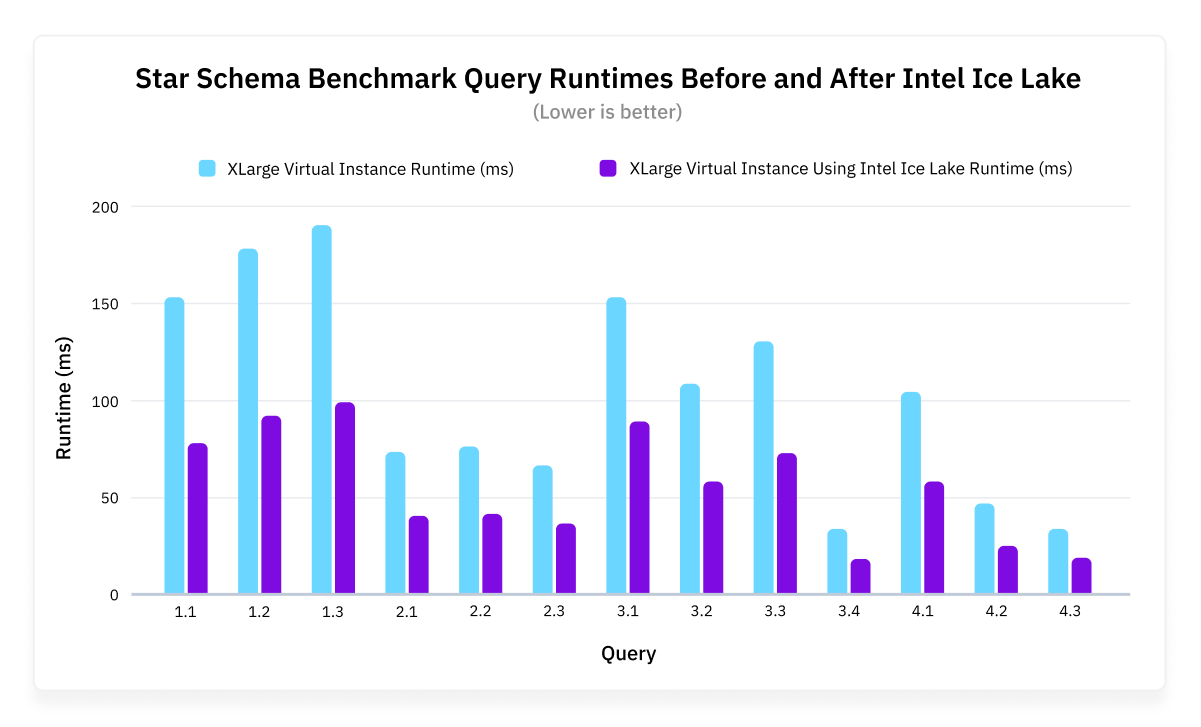

The SSB is a suite of 13 analytical queries. The entire query suite completed in 733 ms on Rockset using Intel Ice Lake compared to 1,347 ms before, corresponding to an 84% speedup overall (1,347 / 733 ≈ 1.84x). Rockset was faster using Intel Ice Lake on all 13 SSB queries and was 95% faster on the query with the largest speedup.

Figure 1: Chart comparing Rockset XLarge Virtual Instance runtime on SSB queries before and after using Intel Ice Lake. The configuration is 32 vCPU and 256 GiB of memory.

Figure 2: Graph showing Rockset XLarge Virtual Instance runtime on SSB queries before and after using Intel Ice Lake.

We applied clustering to the columnar index and ran each query 1,000 times on a warmed OS cache, reporting the mean runtime. No query result caching was used for the evaluation. The times are those reported by Rockset’s API Server.
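To make the methodology and the headline number concrete, here is a minimal Python sketch of a warm-up-then-mean timing loop and of the speedup arithmetic. The function names, the `warmup` parameter and the client-side timer are assumptions made for illustration; the published times come from Rockset's API Server, not from a harness like this one.

```python
import statistics
import time
from typing import Callable


def mean_runtime_ms(run_query: Callable[[], object], runs: int = 1000, warmup: int = 10) -> float:
    """Run the query `warmup` times untimed, then return the mean of `runs` timed executions in ms."""
    for _ in range(warmup):
        run_query()
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        run_query()
        samples.append((time.perf_counter() - start) * 1000.0)
    return statistics.mean(samples)


def speedup_percent(before_ms: float, after_ms: float) -> float:
    """Percent faster, defined as the runtime ratio (before / after) minus one."""
    return (before_ms / after_ms - 1.0) * 100.0


# Suite totals reported above: 1,347 ms before and 733 ms after.
suite_speedup = speedup_percent(1347, 733)
print(f"{suite_speedup:.0f}% faster")
```

Note that "84% faster" here is a ratio of runtimes, which is different from saying the runtime dropped by 84%.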

Rockset Performance Enhancements

We highlight several performance enhancements that provide better support for a range of query patterns found in data applications.

Materialized Common Table Expressions (CTEs)

Rockset materializes CTEs to reduce overall query execution time.

CTEs or subqueries are a common query pattern. The same CTE is often used multiple times in query execution, causing the CTE to be rerun and adding to overall execution time. Below is a sample query where a CTE is referenced twice:

    WITH maxcategoryprice AS
    (
        SELECT category,
               max(price) max_price
        FROM products
        GROUP BY category
    ) hint(materialize_cte = true)
    SELECT c1.category,
           sum(c1.amount),
           max(c2.max_price)
    FROM ussales c1
    JOIN maxcategoryprice c2
        ON c1.category = c2.category
    GROUP BY c1.category
    UNION ALL
    SELECT c1.category,
           sum(c1.amount),
           max(c2.max_price)
    FROM eusales c1
    JOIN maxcategoryprice c2
        ON c1.category = c2.category
    GROUP BY c1.category

With Materialized CTEs, Rockset executes a CTE only once and caches the results to reduce resource consumption and query latency.
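The effect of materialization can be illustrated with a small in-memory simulation of the query above. This is only a sketch of the idea (evaluate the CTE body once and reuse the result at each reference), not Rockset's execution engine; the sample rows, the `counter` dictionary and all function names are hypothetical.

```python
from collections import defaultdict

# Hypothetical sample data standing in for the three collections.
products = [
    {"category": "books", "price": 30},
    {"category": "books", "price": 45},
    {"category": "toys", "price": 20},
]
ussales = [{"category": "books", "amount": 100}, {"category": "toys", "amount": 50}]
eusales = [{"category": "books", "amount": 70}]

counter = {"cte_runs": 0}  # how many times the CTE body is actually evaluated


def max_category_price():
    """CTE body: maximum price per category."""
    counter["cte_runs"] += 1
    result = {}
    for row in products:
        cat = row["category"]
        result[cat] = max(result.get(cat, row["price"]), row["price"])
    return result


def sales_branch(sales, cte):
    """One UNION ALL branch: inner join on category, then sum(amount) and max(max_price)."""
    totals = defaultdict(int)
    for row in sales:
        if row["category"] in cte:
            totals[row["category"]] += row["amount"]
    return [(cat, total, cte[cat]) for cat, total in sorted(totals.items())]


def run_query(materialize):
    """Run both branches, either sharing one CTE result or recomputing it per reference."""
    if materialize:
        cached = max_category_price()  # evaluated once, reused by both branches
        return sales_branch(ussales, cached) + sales_branch(eusales, cached)
    return sales_branch(ussales, max_category_price()) + sales_branch(eusales, max_category_price())


counter["cte_runs"] = 0
rows = run_query(materialize=True)
runs_materialized = counter["cte_runs"]

counter["cte_runs"] = 0
rows_inlined = run_query(materialize=False)
runs_inlined = counter["cte_runs"]
```

Both modes return the same rows; the only difference is that the CTE body runs once when materialized and twice when it is re-evaluated at each reference.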

Stats-Based Predicate Pushdown

Rockset uses collection statistics to adapt its predicate pushdown strategy, resulting in up to 10x faster queries.

For context, a predicate is an expression that is true or false, typically located in the WHERE or HAVING clause of a SQL query. A predicate pushdown uses the predicate to filter the data in the query, moving query processing closer to the storage layer.

Rockset organizes data in a Converged Index™, which combines a search index, a column-based index and a row store for efficient retrieval. For highly selective search queries, Rockset uses its search index to locate documents matching the predicates and then fetches the corresponding values from the row store.

A query may contain both broadly selective predicates, which match many documents, and narrowly selective predicates, which match few. With broadly selective predicates, Rockset reads more data from the index, slowing down query execution. To avoid this problem, Rockset introduced stats-based predicate pushdown, which uses collection statistics to determine whether each predicate is broadly or narrowly selective. Only narrowly selective predicates are pushed down, resulting in up to 10x faster queries.

Here is a query that contains both broadly and narrowly selective predicates:

    SELECT first_name, last_name, age
    FROM students
    WHERE last_name = 'Borthakur' AND age = 10

The last name Borthakur is uncommon, so last_name = 'Borthakur' is a narrowly selective predicate; the age 10 is common, so age = 10 is a broadly selective predicate. The stats-based predicate pushdown will push down only last_name = 'Borthakur' to speed up execution.
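The decision described above can be sketched in a few lines of Python. Everything here is an assumption made for illustration: the `FieldStats` structure, the per-value counts, the 1% threshold and the `plan_predicates` function are hypothetical, and the source does not describe which statistics Rockset collects or how it sets the cutoff. Field names are written with underscores.

```python
from dataclasses import dataclass


@dataclass
class FieldStats:
    """Hypothetical per-field statistics: total documents and a count per value."""
    total_docs: int
    value_counts: dict

    def selectivity(self, value) -> float:
        """Estimated fraction of documents matching `field = value`."""
        return self.value_counts.get(value, 0) / self.total_docs


def plan_predicates(predicates, stats, threshold=0.01):
    """Split equality predicates into those pushed to the search index and those filtered afterwards."""
    pushed, residual = [], []
    for field, value in predicates:
        if stats[field].selectivity(value) <= threshold:
            pushed.append((field, value))    # narrowly selective: few matches to fetch
        else:
            residual.append((field, value))  # broadly selective: apply as a later filter
    return pushed, residual


# Made-up statistics for a collection of 100,000 students.
stats = {
    "last_name": FieldStats(100_000, {"Borthakur": 3, "Smith": 2_500}),
    "age": FieldStats(100_000, {10: 9_000, 11: 9_200}),
}
pushed, residual = plan_predicates([("last_name", "Borthakur"), ("age", 10)], stats)
```

With these made-up counts, the last-name predicate matches 0.003% of documents and is pushed down, while the age predicate matches 9% and is kept as a residual filter on the few documents that the index lookup returns.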

Row-Store Cache

We designed a Multiversion Concurrency Control (MVCC) cache for the row store to reduce the overhead of metadata operations, and thereby query latency, when the working set fits into memory.

Consider a query of the form:

    SELECT name
    FROM students
    WHERE age = 10

When the predicate is highly selective, meaning it matches few documents, we use the search index to retrieve the identifiers of the documents matching the predicate (e.g., WHERE age = 10) and then the row store to retrieve the requested column values for those documents (e.g., name).

Rockset uses RocksDB as its embedded storage engine, storing documents as key-value pairs (i.e., the document identifier is the key and the document value is the value). RocksDB provides an in-memory cache, called the block cache, that keeps frequently accessed data blocks in memory. A block typically contains multiple documents. RocksDB uses a metadata lookup operation, consisting of an internal indexing technique and bloom filters, to find the block and the position inside the block with the document value.

The metadata lookup operation takes a significant proportion of the working set memory, impacting query latency. Furthermore, the metadata lookup operation is used in the execution of each individual query, leading to more memory consumption in high QPS workloads.

We designed a complementary MVCC cache maintaining a direct mapping from the document identifier to the document value for the row store, bypassing block-based caching and the metadata operation. This improves the query performance for workloads where the working set fits in memory.
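As a rough illustration of the idea, the sketch below keeps a direct mapping from document identifier to versioned values, so a read is a dictionary lookup plus a binary search rather than a block lookup. This is a toy model, not Rockset's implementation: the class name, the use of integer sequence numbers as versions and the absence of eviction and deletes are all assumptions.

```python
import bisect


class MvccRowCache:
    """Toy MVCC cache: document id -> versions ordered by sequence number."""

    def __init__(self):
        self._versions = {}  # doc_id -> (list of sequence numbers, list of values)

    def put(self, doc_id, seq, value):
        """Record the value written at sequence number `seq` (assumed increasing per document)."""
        seqs, values = self._versions.setdefault(doc_id, ([], []))
        seqs.append(seq)
        values.append(value)

    def get(self, doc_id, snapshot_seq):
        """Return the newest value visible at `snapshot_seq`, or None on a cache miss."""
        entry = self._versions.get(doc_id)
        if entry is None:
            return None
        seqs, values = entry
        idx = bisect.bisect_right(seqs, snapshot_seq)  # number of versions with seq <= snapshot
        return values[idx - 1] if idx else None


cache = MvccRowCache()
cache.put("doc1", 5, {"name": "Ada", "age": 10})
cache.put("doc1", 9, {"name": "Ada L.", "age": 10})

old_view = cache.get("doc1", 7)   # snapshot taken between the two writes
new_view = cache.get("doc1", 9)   # snapshot at or after the second write
too_early = cache.get("doc1", 3)  # snapshot before the first write
missing = cache.get("doc2", 9)    # document not cached
```

Keeping several versions per document lets a query that started at an older snapshot keep reading a consistent value while newer writes arrive. On a miss, a real system would fall back to the storage engine.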

The Cloud Performance Differential

We continually invest in the performance of Rockset and in making real-time analytics more affordable and accessible. With the release of new software that leverages 3rd Gen Intel® Xeon® Scalable processors, Rockset is now 84% faster than before on the Star Schema Benchmark.

Rockset is cloud-native, and performance enhancements are made available to customers automatically without requiring infrastructure tuning or manual upgrades. See how the performance enhancements impact your data application by joining the early access program available this month.